In June 2019, DIME hosted the fifth annual Manage Successful Impact Evaluations course. New additions for the 2019 course included hands-on sessions, where participants applied the lecture content to real-life impact evaluation scenarios, and a career panel, where former DIME field coordinators shared their experiences of moving on to different career paths after working in the field. Instructors included staff from DECIE, DECDG, and SurveyCTO (a computer-assisted survey software firm), as well as a guest lecturer from the Center for Global Development. Presentations and training materials from the course are publicly available on GitHub.

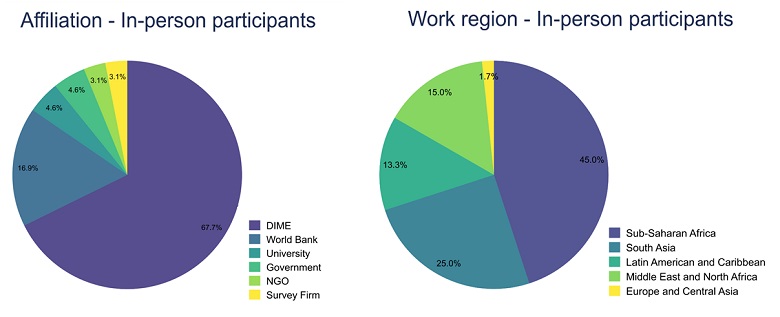

Sixty-six people participated in the course in person in Washington, DC. Most in-person participants (83%) were World Bank staff. The remaining participants came from universities or research institutes (George Washington University, Stanford University, Eastern Mennonite University), governments (including public officials from Cabo Verde, Turkey, and Argentina), NGOs (e.g., Atlas Corps, Treasureland Initiative), and survey firms.

A further 205 people registered to participate in the course remotely via WebEx. A quarter of the remote participants work at universities or research institutes (e.g., Georgetown University, University of Chicago, Michigan State University, The State University of Zanzibar, University of Limpopo). Another 23% work in the private sector, including survey firms, consultancy firms (Deloitte, PwC, KPMG), companies such as Gray Matters India, and others. One fifth work at NGOs, e.g., Save the Children, VillageReach, Atlas Corps, and TradeMark East Africa. Government officials from 12 countries (including the US, Turkey, Somalia, and Peru) and staff of United Nations agencies (WHO, WFP, UNICEF) each make up around 8% of remote participants. The remainder come from multilateral development banks (World Bank, the Inter-American Development Bank, and the Asian Development Bank) and from donors and aid agencies (DFID, GIZ, EU). Of the remote participants, 28 followed more than half of the five-day course, and 18 attended all remote sessions.

Course participants work in a variety of regions. Most in-person participants work in Sub-Saharan Africa (45%), South Asia (25%), or Latin America (13%); the corresponding shares among remote participants are 31%, 10%, and 4%. Among remote participants, the most common work region is North America (37%).

In-person participants took a knowledge test at the beginning and end of the course to measure learning outcomes. The test had 16 questions on technical topics covered in the training, such as protocols for survey piloting and back-checks, enumerator selection, what constitutes personal identifiers in data, the effect of take-up on statistical power, and programming in Stata. We see substantial and statistically significant knowledge gains: average test scores increased by 56%. Across the test's sub-categories, the largest gains were in impact evaluation methods, where scores more than doubled.

Participants found the sessions highly relevant to their work and well delivered. All sessions were rated as well or very well presented by both in-person and WebEx participants.